Hadoop/HDFS Commands:

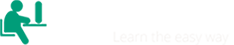

To use Hadoop commands, first make sure that your Hadoop services are up and running. If you haven’t installed Hadoop yet, check out this post.

To start Hadoop services, use the following command:

$ start-all.sh

To check that the Hadoop services are up and running, use the command below:

$ jps
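The output of jps can also be checked by a small script. The sketch below assumes a single-node Hadoop 2.x setup where start-all.sh launches the five daemons named in EXPECTED_DAEMONS; both the list and the helper name missing_daemons are illustrative, so adjust them to your installation.

```shell
# Daemons assumed to be started by start-all.sh on a single-node Hadoop 2.x setup.
EXPECTED_DAEMONS="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"

# Reads jps-style output ("<pid> <name>" per line) on stdin and prints
# every expected daemon that does not appear in it.
missing_daemons() (
  listing=$(cat)
  for daemon in $EXPECTED_DAEMONS; do
    # Compare the whole second column so NameNode does not match SecondaryNameNode.
    printf '%s\n' "$listing" |
      awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
      echo "$daemon"
  done
)

# Usage against a live installation:
#   jps | missing_daemons
```

If the function prints nothing, every expected daemon is running.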

So, let’s start with the basic commands:

-

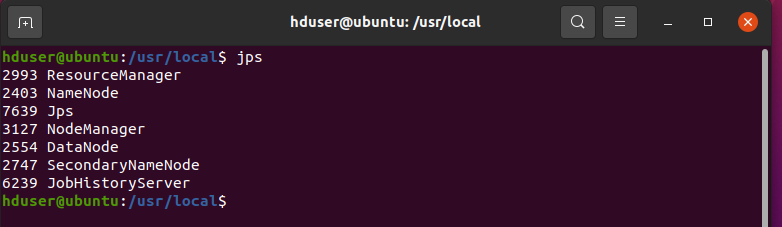

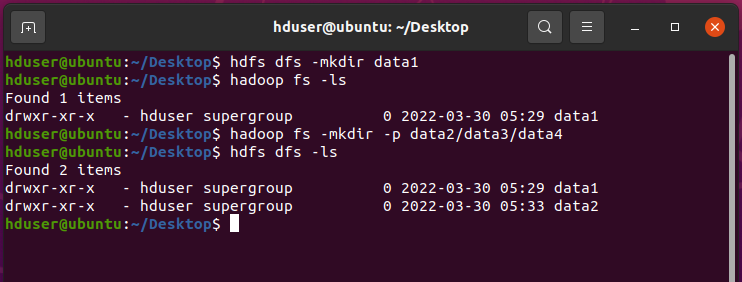

Create a directory in HDFS.

Syntax-

$ hdfs dfs -mkdir [-p] <path>

Example-

$ hadoop fs -mkdir data1 --- Generic file system shell (works in Hadoop 1.x and later)

$ hdfs dfs -mkdir data11 --- HDFS-specific form (Hadoop 2.x and later)

$ hadoop fs -mkdir -p data2/data3/data4 --- Folders created recursively

Note: -p creates any missing parent folders along the path (first the data2 folder, then data3 inside it, then data4 inside data3).
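To make the effect of -p concrete, here is a small local sketch that prints each folder level the option would make sure exists for a relative path. The helper name parent_chain is hypothetical and the function never contacts HDFS; it only splits the path.

```shell
# Prints every directory level that "-mkdir -p" would ensure exists
# for a relative path such as data2/data3/data4.
parent_chain() (
  set -f        # do not expand wildcards while splitting the path
  IFS=/
  prefix=""
  for part in $1; do
    [ -n "$part" ] || continue
    prefix="${prefix:+$prefix/}$part"
    echo "$prefix"
  done
)

parent_chain data2/data3/data4
```

This prints data2, data2/data3 and data2/data3/data4, one per line, which is the order in which the folders are created.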

-

-ls command.

The -ls command lists the files and folders in an HDFS directory:

Syntax-

$ hdfs dfs -ls [path]

Example 1-

$ hdfs dfs -ls

Example 2 with path-

$ hadoop fs -ls data2/data3

Found 1 items

drwxr-xr-x - hduser supergroup 0 2022-03-30 05:33 data2/data3/data4

-

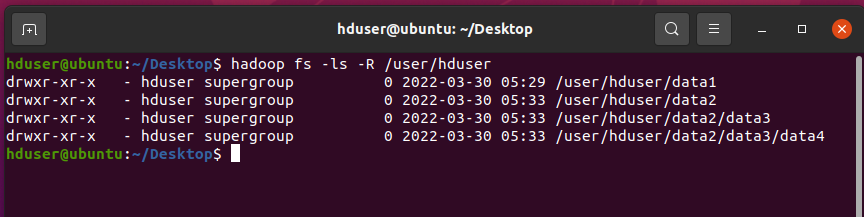

Display files and folders Recursively.

Example-

$ hadoop fs -ls -R /user/hduser

drwxr-xr-x - hduser supergroup 0 2022-03-30 05:29 /user/hduser/data1

drwxr-xr-x - hduser supergroup 0 2022-03-30 05:33 /user/hduser/data2

drwxr-xr-x - hduser supergroup 0 2022-03-30 05:33 /user/hduser/data2/data3

drwxr-xr-x - hduser supergroup 0 2022-03-30 05:33 /user/hduser/data2/data3/data4

-R: used to display the listing recursively.
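A recursive listing can get long, so it is often piped through a filter. The sketch below keeps only the directory entries; the helper name list_dirs is hypothetical, and it assumes the usual eight-column -ls layout with paths that contain no spaces.

```shell
# Reads "-ls" output on stdin and prints the path (8th column)
# of every entry whose permission string starts with "d".
list_dirs() {
  awk '$1 ~ /^d/ { print $8 }'
}

# Usage against a live cluster:
#   hadoop fs -ls -R /user/hduser | list_dirs
```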

-

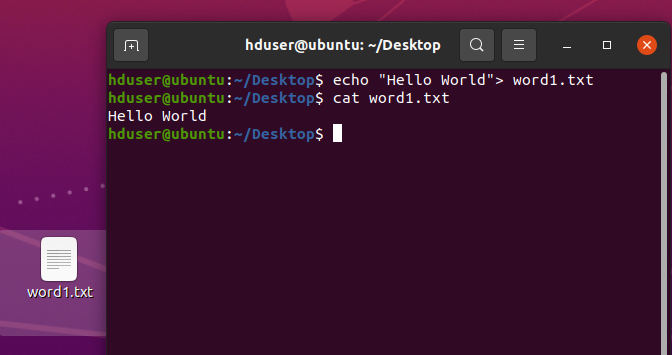

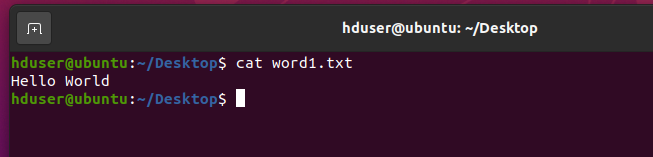

Create a file called “word1.txt” in “/home/hduser/” with some data.

$ echo "Hello World" > word1.txt

$ cat word1.txt

Hello World

-

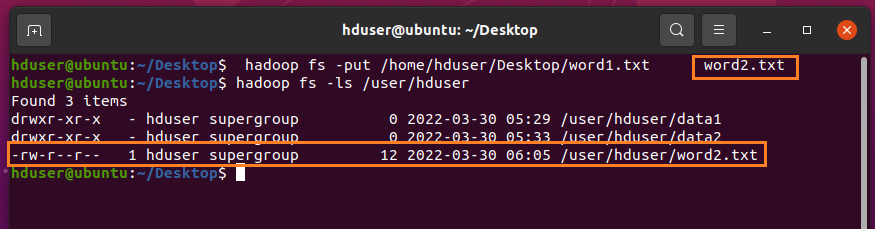

Copy Single & Multiple File From Local To HDFS.

This command is very useful in Hadoop. There are two ways to run it: “-put” and “-copyFromLocal”.

Syntax-

$ hdfs dfs -put <local path> <hdfs path>

$ hdfs dfs -copyFromLocal <local path> <hdfs path>

Example-

We will copy the above file "word1.txt" to HDFS, where it is stored under the name "word2.txt".

$ hadoop fs -put /home/hduser/Desktop/word1.txt word2.txt

$ hadoop fs -ls /user/hduser -- word2.txt is stored in HDFS
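When -put is called from a script, it helps to check that the local file exists first. The wrapper below is a minimal sketch: safe_put and the HDFS_CMD override are hypothetical names introduced here, and HDFS_CMD exists only so the function can be dry-run with echo on a machine without Hadoop.

```shell
# Copies a local file to HDFS only if the local file exists.
# HDFS_CMD is a hypothetical override; it defaults to the real "hdfs" command.
safe_put() (
  src=$1
  dst=$2
  if [ ! -f "$src" ]; then
    echo "local file not found: $src" >&2
    exit 1
  fi
  ${HDFS_CMD:-hdfs} dfs -put "$src" "$dst"
)

# Usage:
#   safe_put /home/hduser/word1.txt word2.txt
# Dry run that only prints the arguments:
#   HDFS_CMD=echo safe_put /home/hduser/word1.txt word2.txt
```

Note that -put refuses to overwrite a file that already exists at the destination unless the -f option is added.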

Example Multiple File-

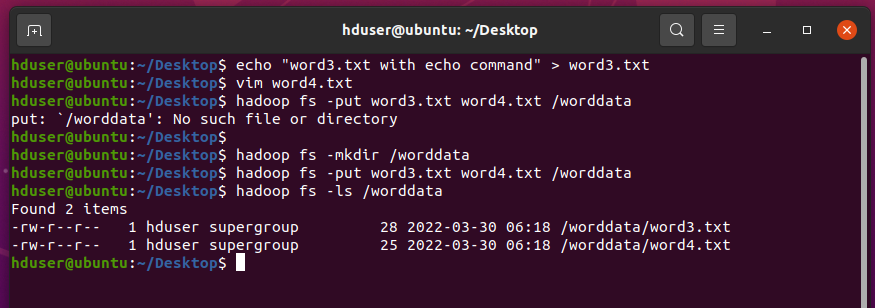

Create a file with some data:

$ echo "word3.txt with echo command" > word3.txt

$ vim word4.txt -- put some data and save it.

// Create a Folder

$ hadoop fs -mkdir /worddata

// Copy word3.txt & word4.txt files to worddata folder

$ hadoop fs -put word3.txt word4.txt /worddata

-

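cat command.

The "cat" command displays the content of a file. The example below runs it on the local file; for a file stored in HDFS, use "hadoop fs -cat" with the HDFS path.

$ cat word1.txt

Hello World --- Display the content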

-

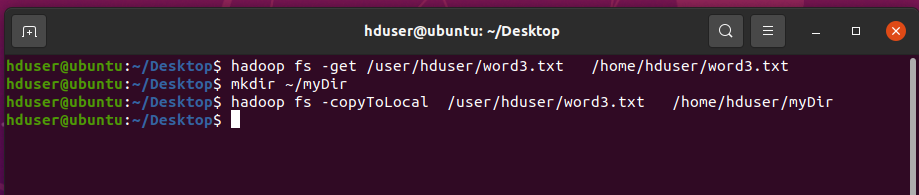

-get (or) -copyToLocal:

This command copies files or folders from an HDFS location to the local file system.

Syntax-

$ hadoop fs -get hdfs_file_path local_file_path

(OR)

$ hadoop fs -copyToLocal hdfs_file_path local_file_path

Example-

// copy word3.txt from HDFS location to Local location

$ hadoop fs -get /user/hduser/word3.txt /home/hduser/word3.txt

// Create a directory.

$ mkdir ~/myDir

// copy word3.txt file to myDir folder present in local file system.

$ hadoop fs -copyToLocal /user/hduser/word3.txt /home/hduser/myDir

-

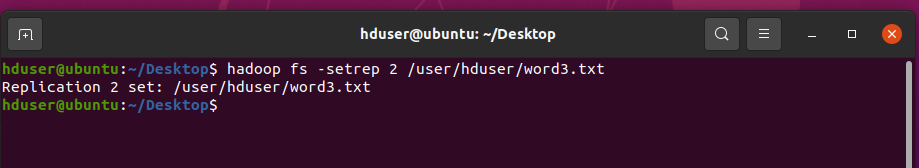

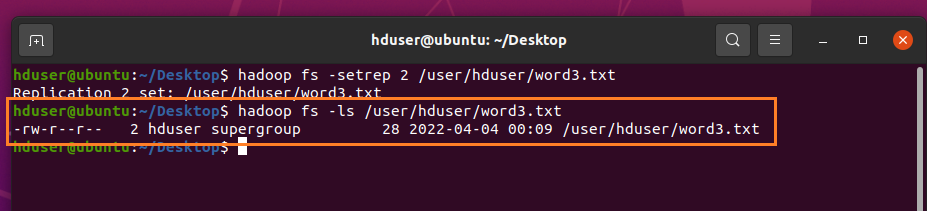

Setting Replication on a particular file.

-setrep sets the replication factor of one or more files or directories in HDFS. By default, the replication factor is 3.

Example-

// Set 2 replicas on the word3.txt file.

$ hadoop fs -setrep 2 /user/hduser/word3.txt

Now use -ls to check; the replication factor appears in the second column of the listing.

$ hadoop fs -ls /user/hduser/word3.txt
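The replication factor can be read straight out of the -ls output. The sketch below prints it together with the raw space the file occupies across the cluster (file size multiplied by the replication factor); the helper name replica_usage is hypothetical, and the usual eight-column listing layout is assumed.

```shell
# Reads "-ls" output on stdin and, for each file entry, prints
# "<replication factor> <file size in bytes x replication factor>".
# Directories are skipped because their replication column is "-".
replica_usage() {
  awk '$1 !~ /^d/ && NF >= 8 { print $2, $5 * $2 }'
}

# Usage against a live cluster:
#   hadoop fs -ls /user/hduser/word3.txt | replica_usage
```

For a 28-byte file with 2 replicas this prints "2 56".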

-

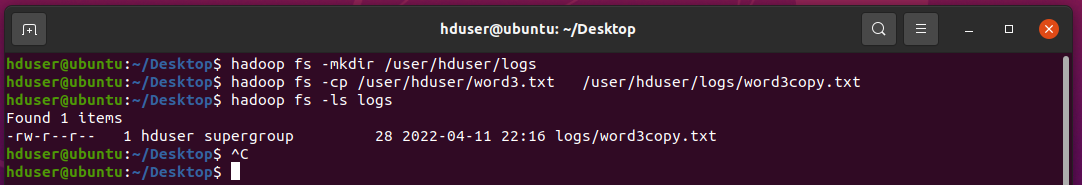

-cp command:

The “-cp” command copies a source to a target within the HDFS cluster. It can also copy multiple files.

The example below copies a file from one HDFS location to another directory.

Syntax-

$ hdfs dfs -cp <hdfs source> <hdfs dest.>

Example-

// Create a folder called logs in hduser.

$ hadoop fs -mkdir /user/hduser/logs

// copy word3.txt to logs folder as word3copy.txt

$ hadoop fs -cp /user/hduser/word3.txt /user/hduser/logs/word3copy.txt

$ hadoop fs -ls logs

Found 1 items

-rw-r--r-- 1 hduser supergroup 28 2022-04-11 22:16 logs/word3copy.txt

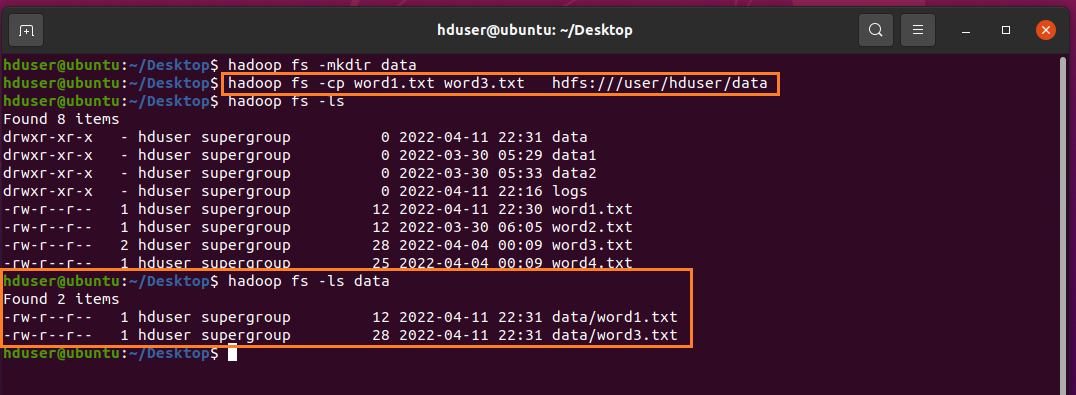

To copy multiple files into a single target directory, list all the sources before the target. The target directory must already exist. Below is an example.

// Create a folder called data in hdfs

$ hadoop fs -mkdir data

// copy word1.txt and word3.txt to data folder.

$ hadoop fs -cp word1.txt word3.txt hdfs:///user/hduser/data

$ hadoop fs -ls data

Found 2 items

-rw-r--r-- 1 hduser supergroup 12 2022-04-11 22:31 data/word1.txt

-rw-r--r-- 1 hduser supergroup 28 2022-04-11 22:31 data/word3.txt

// copy all the files starting with “word”.

$ hadoop fs -cp word*.txt hdfs:///user/hduser/data
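One thing to watch with wildcards: if the current local directory happens to contain files matching word*.txt, the local shell expands the pattern before hadoop ever sees it. The sketch below shows the difference between the unquoted and quoted forms using only local commands; count_args and the scratch directory are illustrative and nothing here touches HDFS.

```shell
# Prints how many arguments it received.
count_args() { echo "$#"; }

# Scratch directory with two local files whose names match the pattern.
demo_dir=$(mktemp -d)
touch "$demo_dir/word1.txt" "$demo_dir/word3.txt"

# Unquoted: the local shell expands the pattern into the local file names.
unquoted=$(cd "$demo_dir" && count_args word*.txt)

# Quoted: the pattern is passed through as one literal argument,
# leaving the matching to the command that receives it.
quoted=$(cd "$demo_dir" && count_args 'word*.txt')

echo "unquoted: $unquoted argument(s), quoted: $quoted argument(s)"
rm -rf "$demo_dir"
```

Writing the pattern in single quotes, as in hadoop fs -cp 'word*.txt' hdfs:///user/hduser/data, is therefore the safer form when the match should happen against HDFS paths.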

-

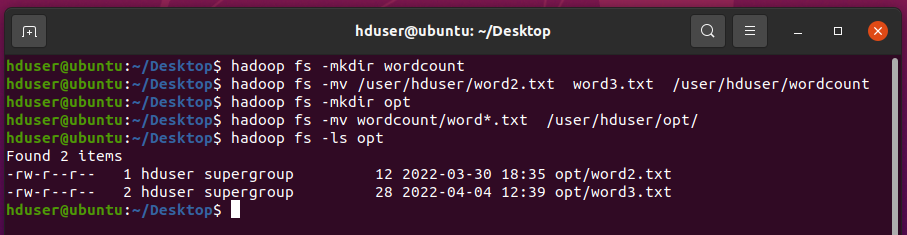

-mv command:

It moves files from a source HDFS location to a destination HDFS location. It can also move multiple source files into a target directory, which must already exist.

// Create a folder called “wordcount” in HDFS.

$ hadoop fs -mkdir wordcount

// move word2.txt & word3.txt inside wordcount folder.

$ hadoop fs -mv /user/hduser/word2.txt word3.txt /user/hduser/wordcount

// Create another folder called “opt” in HDFS.

$ hadoop fs -mkdir opt

// Move all the files that start with the word “word” to “opt” folder.

$ hadoop fs -mv wordcount/word*.txt /user/hduser/opt/

$ hadoop fs -ls opt

Found 2 items

-rw-r--r-- 1 hduser supergroup 12 2022-03-30 18:35 opt/word2.txt

-rw-r--r-- 2 hduser supergroup 28 2022-04-04 12:39 opt/word3.txt

-

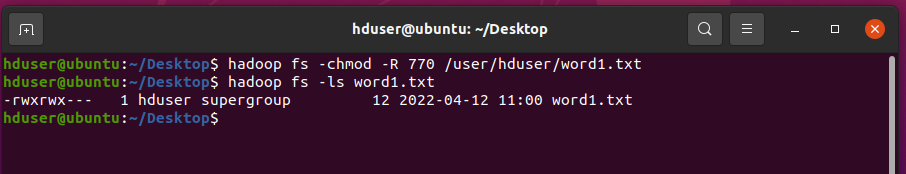

-chmod command:

The hdfs dfs “-chmod” command changes the permissions of files. The “-R” option applies the change recursively through a directory structure.

Syntax-

$ hadoop fs -chmod [-R] <mode | octal mode> <file or directory name>

Example-

$ hadoop fs -chmod -R 770 /user/hduser/word1.txt

$ hadoop fs -ls word1.txt

-rwxrwx--- 1 hduser supergroup 12 2022-04-12 11:00 word1.txt
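To see how an octal mode such as 770 maps onto the permission string shown by -ls, here is a small local sketch. The helper name octal_to_rwx is hypothetical; it only translates digits and does not call Hadoop.

```shell
# Converts an octal mode such as 770 into its rwx string (rwxrwx---).
octal_to_rwx() (
  mode=$1
  out=""
  while [ -n "$mode" ]; do
    rest=${mode#?}           # everything after the first character
    digit=${mode%"$rest"}    # the first character
    case $digit in
      0) out="${out}---" ;;
      1) out="${out}--x" ;;
      2) out="${out}-w-" ;;
      3) out="${out}-wx" ;;
      4) out="${out}r--" ;;
      5) out="${out}r-x" ;;
      6) out="${out}rw-" ;;
      7) out="${out}rwx" ;;
      *) echo "invalid octal digit: $digit" >&2; exit 1 ;;
    esac
    mode=$rest
  done
  echo "$out"
)

octal_to_rwx 770
```

The three digits stand for owner, group and others, so 770 gives rwxrwx---, which matches the listing for word1.txt apart from the leading file-type character.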

-

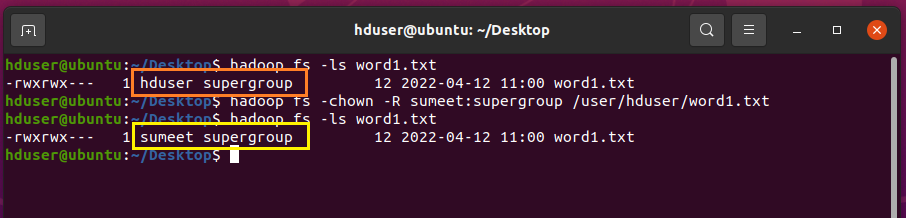

-chown command:

The hadoop fs “-chown” command changes the ownership of files. The -R option applies the change recursively through a directory structure.

Syntax-

$ hadoop fs -chown [-R] <NewOwnerName>[:NewGroupName] <file or directory>

Example-

$ hadoop fs -ls word1.txt

-rwxrwx--- 1 hduser supergroup 12 2022-04-12 11:00 word1.txt

// Changing ownership of word1.txt file.

$ hadoop fs -chown -R sumeet:supergroup /user/hduser/word1.txt

$ hadoop fs -ls word1.txt

-rwxrwx--- 1 sumeet supergroup 12 2022-04-12 11:00 word1.txt